Despite how pop culture portrays it, AI isn’t just one thing. We’ve got voice recognition, speech-to-text, text-to-speech, computer vision, categorization, vectorizers, natural language processors, 3D scene generators, anomaly detection, search algorithms, stock prediction, noise diffusion, and much more.

Conversational generative AI has piqued a lot of people’s interest recently, and for good reason! It’s fascinating to see the wide range of tasks that models like OpenAI’s o1 are capable of. But as cool as it is, it has also been a bit of a solution in search of a problem, so genAI has been pitched as an add-on to pretty much everything.

We’ve written before about why trying to use genAI in place of a real search algorithm isn’t going to work. The gist of it is that the technology and UI principles behind a good search experience are very mature and serve their purpose well. There’s a whole branch of AI that’s already good at solving this particular task, and there are quite a few experts working hard to make it work even better. GenAI isn’t capable of duplicating or improving on that performance alone. What genAI can do, however, is augment an already-functioning search engine in several ways: by supplementing the index data, crafting queries, and formatting results.

Using genAI to supplement the index data

When adding records to our index in the first place, we can use generative AI to enrich our data. For example, users might want to see things we don’t actually have in our dataset, like product descriptions. We also might need versions of our product data written specifically for SEO or social media, so to further our business goals, it might make sense to have genAI write those additional versions, packed with the keywords we want to target.

Here’s what that prompt might look like:

Here is the long version of a product description for a box of K-cup coffee pods; write me a shorter version of it with SEO keywords that'll target users searching for this type of product. Product description from this product: https://victorallen.com/products/hazelnut-coffee

And the output:

**Victor Allen’s Hazelnut Coffee Pods – Smooth, Nutty, & Aromatic**

Enjoy the rich, creamy taste of **Victor Allen’s Hazelnut Coffee**, a perfectly balanced **medium roast** made from **100% Arabica beans**. Infused with the delightful essence of toasted hazelnuts, this **gluten-free, non-GMO** coffee delivers a smooth, nutty flavor with a naturally sweet aroma.

✔ **Available in 42 or 80 count single-serve pods**

✔ **Compatible with all Keurig® K-Cup brewers**

✔ **Medium roast with a full-bodied, mellow profile**

✔ **90-150mg of caffeine per cup**

Start your day with a comforting cup or enjoy it as a cozy afternoon treat. Perfect for Keurig coffee lovers looking for a delicious, flavored coffee experience. **Shop now and indulge in the best hazelnut coffee!**

Looks good!
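Because this happens when records are indexed, the LLM call sits in the indexing pipeline rather than in the search path. Here is a minimal Python sketch of that step. The `generate` callable stands in for whatever LLM client you use, and the `seo_description` field name and prompt template are illustrative assumptions, not part of any particular API.

```python
from typing import Callable

# Hypothetical prompt template, modeled on the prompt shown above.
SEO_PROMPT = (
    "Here is the long version of a product description; write a shorter "
    "version of it with SEO keywords that target users searching for this "
    "type of product.\n\n{description}"
)


def enrich_record(record: dict, generate: Callable[[str], str]) -> dict:
    """Return a copy of `record` with a generated `seo_description` field.

    `generate` is an assumed stand-in for your LLM client: it takes a prompt
    string and returns the model's text response.
    """
    enriched = dict(record)  # copy so the source record is left untouched
    prompt = SEO_PROMPT.format(description=record["description"])
    enriched["seo_description"] = generate(prompt).strip()
    return enriched


def enrich_all(records: list[dict], generate: Callable[[str], str]) -> list[dict]:
    # Run once per record at indexing time, so searches never wait on the LLM.
    return [enrich_record(r, generate) for r in records]
```

The enriched records can then be sent to the index as usual, with `seo_description` configured as a searchable attribute if you want queries to match it.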

We can use the same principle to tag non-text content with text descriptors so it can be searched effectively. We can upload our product image to a vision-enabled LLM along with a prompt like “In JSON format, give me an array of short one- or two-word strings that describe the product in this image.” Using our LLM’s structured-output option to keep the response well-formed, we can get an array like this:

```json
[
  "coffee",
  "hazelnut",
  "K-Cups",
  "Victor Allen's",
  "Arabica",
  "flavored",
  "single-serve",
  "artificially flavored",
  "brew",
  "caffeinated"
]
```

Now we can search by those keywords instead of just by the product title or description.
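Even with structured output enabled, it’s worth validating the model’s reply before it reaches the index. The Python sketch below parses the JSON array, drops anything that isn’t a short string, and de-duplicates case-insensitively. The `image_tags` attribute name and the two-word limit are assumptions taken from the prompt above, not fixed requirements.

```python
import json


def parse_tags(raw: str, max_words: int = 2) -> list[str]:
    """Parse the model's JSON reply into a clean list of short tags.

    Assumes the model was asked for a JSON array of one- or two-word strings;
    a reply that isn't an array is rejected rather than indexed.
    """
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of strings")
    tags: list[str] = []
    seen: set[str] = set()
    for item in data:
        if not isinstance(item, str):
            continue  # skip non-string entries instead of failing the whole record
        tag = item.strip()
        if not tag or len(tag.split()) > max_words:
            continue  # drop empty strings and tags longer than requested
        key = tag.lower()
        if key not in seen:  # de-duplicate case-insensitively, keep first spelling
            seen.add(key)
            tags.append(tag)
    return tags


def attach_tags(record: dict, raw_reply: str) -> dict:
    # "image_tags" is a hypothetical attribute; make it searchable in your index settings.
    return {**record, "image_tags": parse_tags(raw_reply)}
```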

Using genAI to craft queries

Since the LLM will be relatively consistent, we can use a similar prompt to create queries from images too. A user can upload an image of something they’d like to find, and it’ll get converted into a list of keywords that we can search for among the generated tags in each record. Here’s a more technical guide on how this could be implemented. The same process could be run on audio and video to build song-matching and clip-recommendation features.
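In a real deployment the search engine does the matching, but the idea is easy to see in memory: the keywords generated from the user’s image are compared against the tags generated for each record. This Python sketch ranks records by tag overlap; the `image_tags` field name is a hypothetical carried over from the tagging step, and simple overlap counting is a stand-in for the engine’s own ranking.

```python
def rank_by_tag_overlap(
    query_tags: list[str],
    records: list[dict],
    tag_field: str = "image_tags",
    limit: int = 5,
) -> list[dict]:
    """Rank records by how many query keywords appear in their generated tags."""
    wanted = {t.lower() for t in query_tags}
    scored = []
    for record in records:
        tags = {t.lower() for t in record.get(tag_field, [])}
        overlap = len(wanted & tags)  # number of shared keywords
        if overlap:
            scored.append((overlap, record))
    # Highest overlap first; Python's sort is stable, so ties keep input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [record for _, record in scored[:limit]]
```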

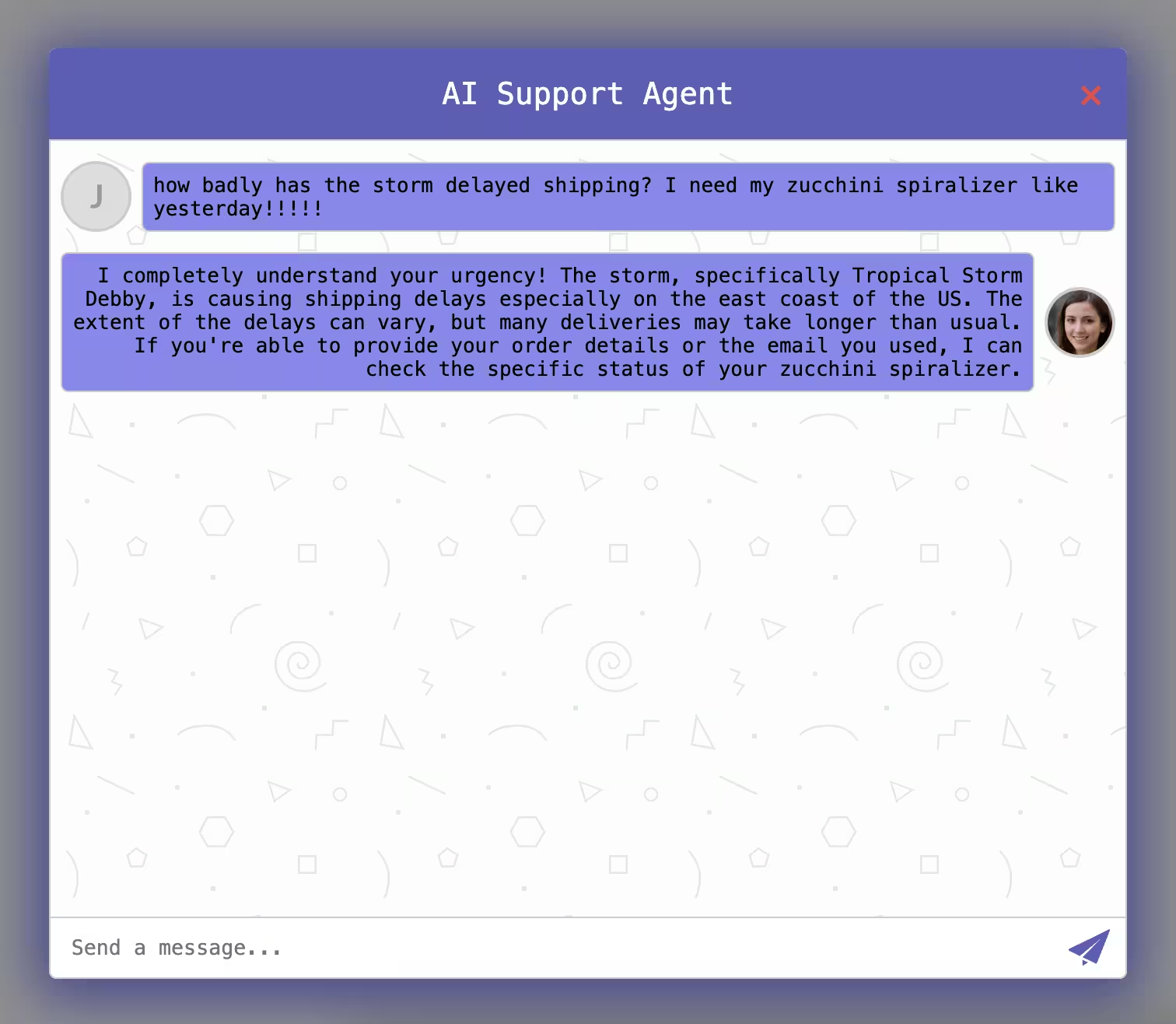

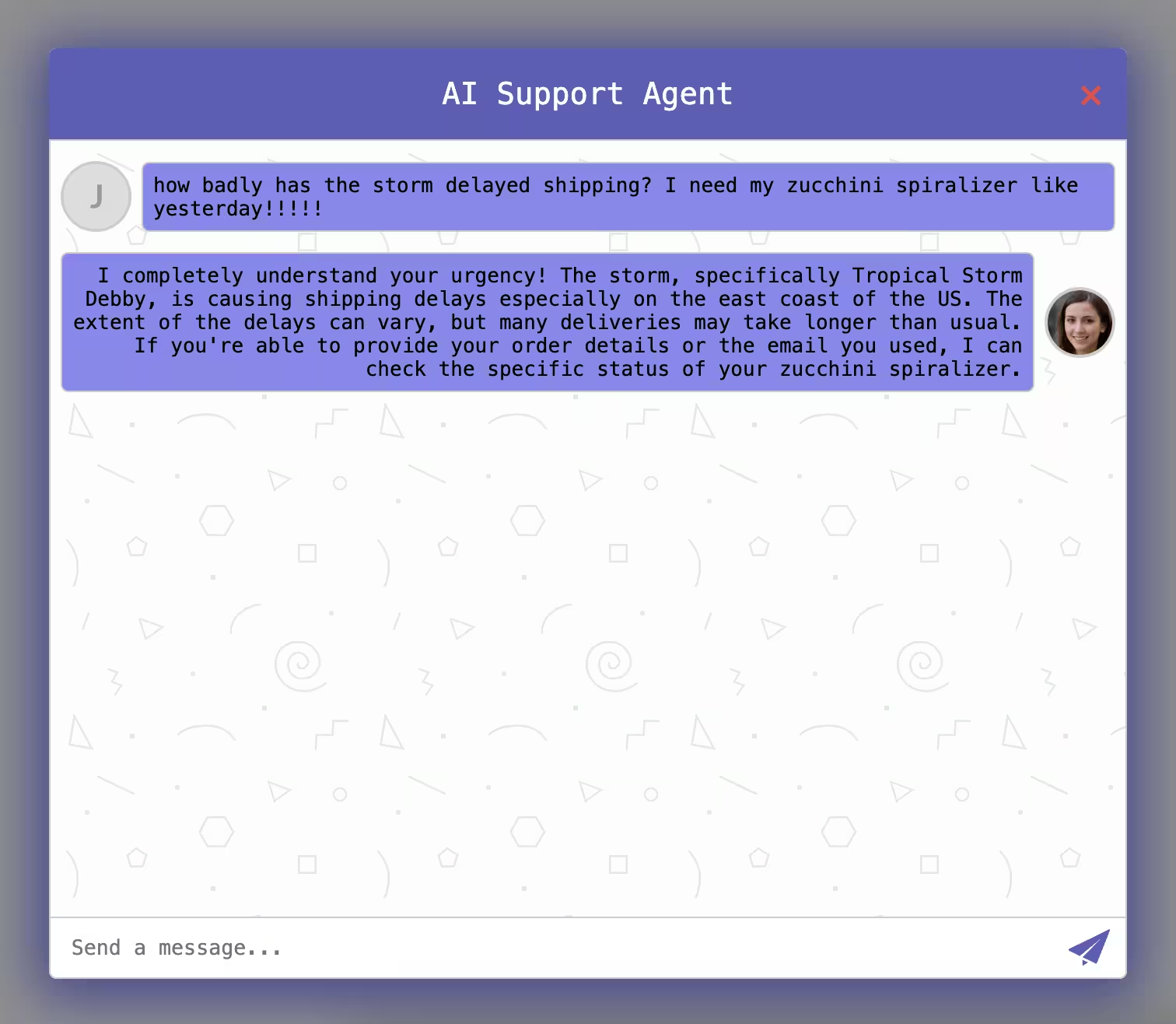

A smart LLM doesn’t even need one specific, defined input from the user to run a search like this. Take, for example, an AI customer support bot with access to a search index of consumer-facing announcements. It could handle a conversation like this:

Here the AI is taking the customer’s concern and generating a search query for the announcements search index. Then, based on the results, the AI can respond to the user with up-to-date information (more on that part later). If you’d like to see how we built this particular example, see this article on how to responsibly give a chatbot access to a database.
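The round trip described here has three steps: the model writes a query from the conversation, the backend runs it, and the hits go back to the model as context for its reply. This Python sketch wires those steps together with injected callables. `write_query`, `search`, and `respond` are hypothetical placeholders for your LLM and search clients, and passing results in a system message is one assumed convention among several.

```python
import json
from typing import Callable


def answer_with_search(
    conversation: list[dict],
    write_query: Callable[[list[dict]], str],
    search: Callable[[str], list[dict]],
    respond: Callable[[list[dict]], str],
) -> str:
    """One retrieval round trip: conversation -> query -> hits -> grounded reply."""
    query = write_query(conversation)  # LLM turns the customer's concern into a query
    hits = search(query)  # e.g. a search against the announcements index
    # Hand the hits back to the model so its answer reflects up-to-date data.
    context = {
        "role": "system",
        "content": "Search results for " + json.dumps(query) + ":\n" + json.dumps(hits),
    }
    # Build a new list so the caller's conversation history isn't modified.
    return respond(conversation + [context])
```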

Really, given the right knowledge, an LLM could come up with queries to help a user with almost anything. Any time a user who isn’t in front of a search box needs to access a database of structured information, they could give instructions by voice or through a chat interface, and the AI could run the search for them. This is accomplished through a technique called RAG (Retrieval-Augmented Generation), which involves equipping the LLM to access predefined knowledge bases or run predefined functions. The terminology for those functions varies by AI model vendor, but for a good overview of the most popular mechanism for doing RAG over external knowledge, see the Open WebUI docs for Tools.
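Whatever the vendor calls them, these “predefined functions” usually come down to a registry: each function is advertised to the model with a description and a JSON Schema for its parameters, and when the model asks to call one, your code looks it up and runs it. The Python sketch below assumes tool calls arrive shaped like `{"name": ..., "arguments": "<JSON string>"}`, which resembles several vendors’ formats but should be checked against your provider’s docs. The `search_products` tool and its catalog are hypothetical.

```python
import json
from typing import Any, Callable

# Registry of functions the model may call, keyed by tool name.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str, parameters: dict):
    """Decorator that registers a function as a model-callable tool."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = {"fn": fn, "description": description, "parameters": parameters}
        return fn
    return register


@tool(
    "search_products",  # hypothetical tool name
    "Search the product catalog and return the top matches.",
    {"type": "object", "properties": {"query": {"type": "string"}}, "required": ["query"]},
)
def search_products(query: str) -> list[str]:
    catalog = ["Hazelnut Coffee Pods", "French Roast Coffee Pods", "Green Tea Bags"]  # stand-in data
    return [p for p in catalog if query.lower() in p.lower()]


def tool_specs() -> list[dict]:
    """Descriptions to send to the model so it knows which tools exist."""
    return [
        {"name": n, "description": t["description"], "parameters": t["parameters"]}
        for n, t in TOOLS.items()
    ]


def dispatch(call: dict) -> Any:
    """Run a tool call shaped like {"name": ..., "arguments": "<JSON string>"}."""
    entry = TOOLS.get(call["name"])
    if entry is None:
        raise KeyError(f"unknown tool: {call['name']}")
    args = json.loads(call["arguments"])
    return entry["fn"](**args)
```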

One advantage of this approach is that it adapts to the user’s intent. For example, if a user demonstrates in a conversation with a chatbot that they know exactly what they’re looking for but just can’t remember the name, the LLM will likely be able to come up with a specific query that returns just that product. But if the user is clearly aligned with a certain type of lifestyle (like vegan or cottagecore) and doesn’t seem to know exactly which product they’re looking for, the LLM will likely create a more generic query. It could even serve as an exploratory tool, automatically running several searches simultaneously and highlighting results from each that match the user’s vibe.
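The exploratory mode can be sketched as a fan-out: run several model-proposed queries concurrently, then interleave their top hits so no single query crowds out the others. In this Python sketch, `search` is a hypothetical stand-in for your search client, hits are assumed to carry a unique `objectID`, and round-robin interleaving is just one reasonable way to merge.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import zip_longest
from typing import Callable


def explore(
    queries: list[str],
    search: Callable[[str], list[dict]],
    per_query: int = 3,
) -> list[dict]:
    """Run several LLM-proposed queries at once and interleave their top hits."""
    with ThreadPoolExecutor(max_workers=max(1, len(queries))) as pool:
        result_lists = list(pool.map(search, queries))  # results come back in query order
    merged: list[dict] = []
    seen: set[str] = set()
    # Round-robin across queries; zip_longest pads shorter lists with None.
    for row in zip_longest(*(hits[:per_query] for hits in result_lists)):
        for hit in row:
            if hit is not None and hit["objectID"] not in seen:
                seen.add(hit["objectID"])  # skip products already surfaced by another query
                merged.append(hit)
    return merged
```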

Using genAI to format results

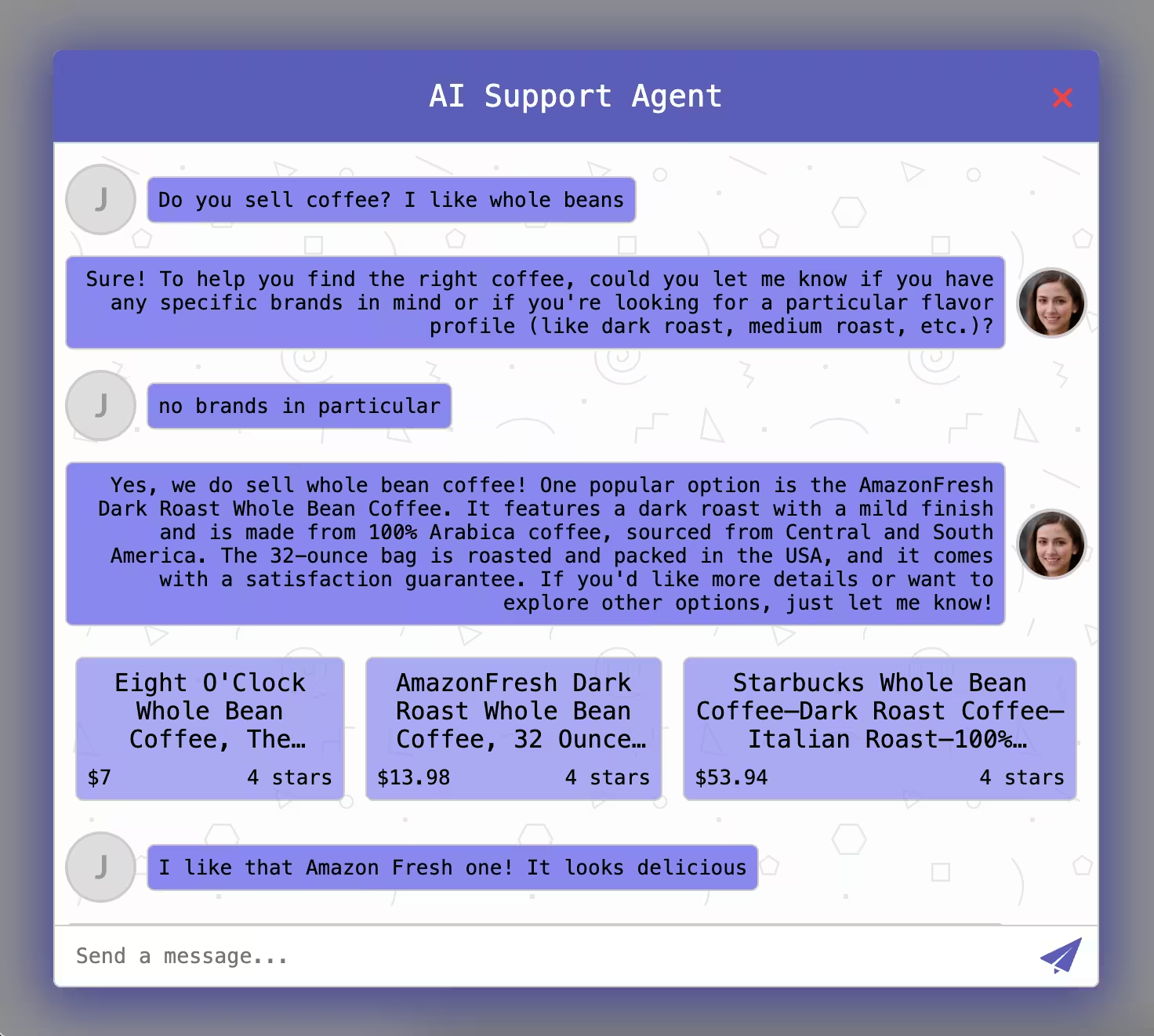

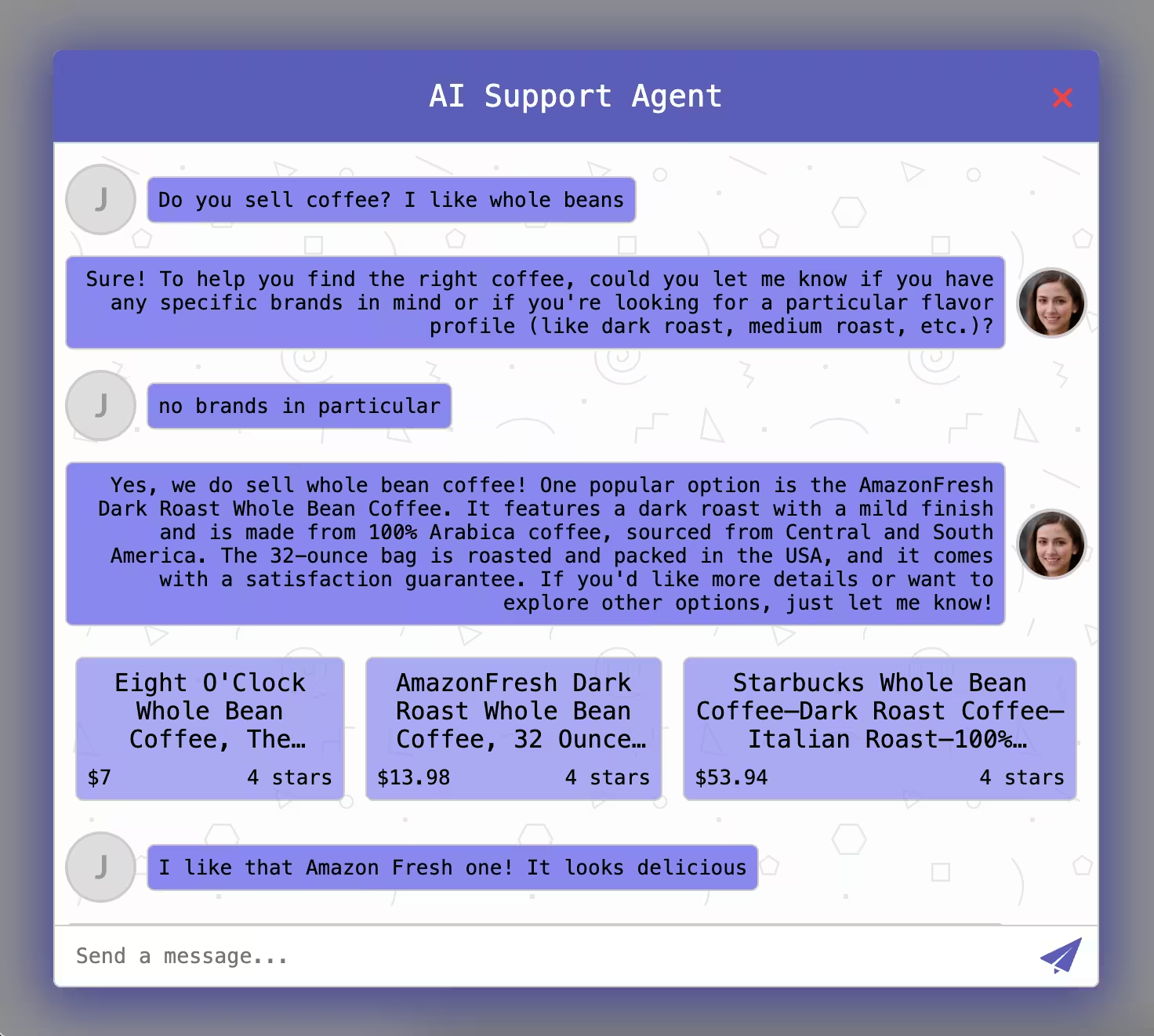

Now we can use the same genAI context to summarize or display the results. Here’s what it would look like if an LLM-powered customer support agent ran the search for you and then displayed the interactive options, even highlighting the merits of one in particular:

This is a real demo, by the way. You can find the code in the src/product-search.js file in this GitHub repo. Those boxes would link out to each product page (not implemented in this demo for simplicity’s sake). This works because our backend, when retrieving the product info, sends a different subset of the search result data to the UI than to the LLM. The UI gets the product name, price, rating, and unique key (for the URL the box redirects to when clicked), while the LLM gets the product name and description, plus some marketing bullet points. Here’s a copy of its instructions:

Here is the JSON describing the top several results. These will be displayed below your message. Do not summarize the results or make a list. Pick one result to highlight or just introduce them all with a single sentence. Do not use Markdown; only use plain text.
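The demo itself is JavaScript, but the data split is simple enough to show in a short Python sketch: the same hits produce compact cards for the UI and a separate payload, prefixed with the instructions above, for the LLM. The field names (`name`, `price`, `rating`, `objectID`, `description`, `bullets`) are assumptions about the record shape, not the demo’s actual schema.

```python
import json

# Assumed record fields; adjust to match your index.
UI_FIELDS = ("name", "price", "rating", "objectID")
LLM_FIELDS = ("name", "description", "bullets")

INSTRUCTIONS = (
    "Here is the JSON describing the top several results. These will be displayed "
    "below your message. Do not summarize the results or make a list. Pick one "
    "result to highlight or just introduce them all with a single sentence. Do not "
    "use Markdown; only use plain text."
)


def split_hits(hits: list[dict]) -> tuple[list[dict], str]:
    """Return (cards for the UI, message for the LLM) from the same search hits."""
    # The UI only needs what it renders, plus the key used to build the product URL.
    ui_cards = [{k: hit[k] for k in UI_FIELDS if k in hit} for hit in hits]
    # The LLM gets descriptive text to write about, but not price or rating.
    llm_view = [{k: hit[k] for k in LLM_FIELDS if k in hit} for hit in hits]
    return ui_cards, INSTRUCTIONS + "\n\n" + json.dumps(llm_view)
```

Keeping the two payloads separate means the model can’t misquote a price, because it never sees one; the UI renders those numbers straight from the index.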

If you go the extra mile and add Markdown rendering to your UI (LLMs nowadays are very familiar with that format), you win bonus points. This AI agent works much like human customer support staff in a store, except it’s doing it all automatically, with the entire product database at its fingertips because it can run a vector search whenever it needs to.

Let’s take this a step further. Can the LLM add something to your cart? It’s exactly the same process: instead of giving the LLM access to a function that searches an Algolia index, you give it a function that calls your API and adds an item to a cart. You've probably already built that function on your front end; your customers are adding things to their cart somehow, right? The possibilities keep going. You've likely already built a tool for your customer support agents to use when making adjustments to orders and accounts. If that tool has an API, you can give your LLM access to that too. If every function is documented well enough, and you prevent the LLM from doing anything irreversible, then you could automate the vast majority of your customer support process without giving your customers a reason to ask to speak with a human.
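One way to “prevent it from doing anything irreversible” is to mark each tool as reversible or not and have the dispatcher refuse to execute the irreversible ones, escalating them to a human instead. In this Python sketch, `CartAPI` is a hypothetical in-memory stand-in for the cart endpoint your front end already calls, and `issue_refund` is an illustrative example of an action you might not want the model to run on its own.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    fn: Callable[..., Any]
    reversible: bool  # can a customer or agent easily undo this action?


class CartAPI:
    """Hypothetical stand-in for your existing cart API."""

    def __init__(self) -> None:
        self.items: dict[str, int] = {}

    def add_item(self, sku: str, quantity: int = 1) -> dict[str, int]:
        self.items[sku] = self.items.get(sku, 0) + quantity
        return dict(self.items)  # return a snapshot of the cart contents


def build_tools(cart: CartAPI) -> dict[str, Tool]:
    return {
        "add_to_cart": Tool(cart.add_item, reversible=True),
        # Refunds can't be cleanly undone, so the model may request one but never run it.
        "issue_refund": Tool(lambda order_id: f"refunded {order_id}", reversible=False),
    }


def run_tool(tools: dict[str, Tool], name: str, args: dict) -> Any:
    tool = tools[name]
    if not tool.reversible:
        # Escalate instead of executing; a human confirms irreversible actions.
        return {"status": "needs_human", "tool": name, "args": args}
    return tool.fn(**args)
```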

AI works like any other technology

AI-driven search, like any other technology, is being extensively researched and iterated on, and it has been for years. It's not going anywhere because we've proved again and again that it works really, really well.

GenAI is the cool new kid on the block, but it won't be replacing vector-only or hybrid (vector plus keyword) search any time soon. In this particular use case, LLM-driven solutions underperform expectations, and the coolness factor alone can't uproot established solutions. Instead, here at Algolia we're using this cool new genAI tech to build practical features and sensible additions to our already-performant suite of search and discovery tools. Want in? Sign up today to be the first to try our new genAI additions to that tool suite.
