We're back from ECIR25, the 47th European Conference on Information Retrieval, held in the beautiful medieval city of Lucca, Italy. After four intense days of research presentations, workshops, poster sessions, and industry discussions, our team of six Algolia engineers returned with heads full of insights and a renewed excitement for the direction our field is heading.

Starting the conference on the IMT Campus in Lucca, Italy

This year's ECIR felt different from previous editions. Where past conferences might have been dominated by theoretical advances and "proof-of-concept" demonstrations, ECIR25 showcased systems that are already running in production, serving millions of users, and solving real business problems. Personally, I could feel a different energy than last years: researchers and practitioners alike seemed to sense we're at an inflection point where advanced IR techniques are finally ready for broad industrial adoption.

What's New in 2025: From Lab to Production 🚀

A striking difference from previous years was the maturity of the solutions being presented. We noticed three major shifts:

Conversational search moved from workshop sideshows to center stage. Where previous ECIRs treated conversational interfaces as an interesting research direction, this year they were framed as a crucial part of the user experience evolution. The main conference tracks featured robust systems handling multi-turn conversations, managing context across sessions, and gracefully handling the complex linguistic constructs (anaphoras, implicitness, …) that make human conversation so natural yet challenging for machines.

RAG systems graduated from prototypes to production frameworks. The Retrieval-Augmented Generation presentations are not anymore “novel architecture showcases”: now the talks on RAG focused on standardizing evaluation methodologies, systematic approaches to context utilization, and frameworks for ensuring factual accuracy at scale. The RURAGE (Robust Universal RAG Evaluator) framework is an example of this shift, empowering practitioners with concrete tools for continuous QA performance testing of production RAG systems.

Multimodal becomes mainstream. Text-only search feels increasingly antiquated when systems can seamlessly work with images, audio, and structured data. Multimodal retrieval and semantic image understanding are becoming important capabilities that complement traditional text search. We can see a gap between the multimodal implementations of ~2020 (mostly research toys) and the current state of the industry, where understanding content beyond textual modality becomes crucial to stay relevant.

The Hybrid Retrieval Revolution: keyword + semantic = stellar search ⚡

If there was one technical consensus that emerged from ECIR25, it's that the old "dense vs. sparse" debate is officially over. Hybrid approaches have won, and it's not even close.

Multiple presentations demonstrated that combining traditional lexical matching (sparse retrieval) with semantic understanding (dense retrieval) consistently outperforms either approach alone. The reasons are now well understood: sparse methods excel at precise keyword matching and handle out-of-vocabulary terms gracefully, while dense approaches capture semantic nuances and enable cross-lingual retrieval.

We could look at evidence from systems that are already deployed at scale. Teams shared real-world results, proving measurable improvements when hybrid architectures replaced single-method implementations. It’s lovely to see these how theoretical improvements translate to business results: beyond the lab benchmarks, we can see impact on actual user engagement and task completion rates.

The QuReTeC (Query Resolution by Term Classification) approach by Nikos Voskarides (University of Amsterdam), Dan Li, Pengjie Ren, Evangelos Kanoulas, and Maarten de Rijke showcased how sophisticated query understanding can bridge conversational context with traditional retrieval.

By modeling query resolution as a binary classification task, QuReTeC demonstrates how to systematically decide which terms from conversation history to include in the current query. This enables focused conversations even over long timelines, which will be crucial for the next generation of personal assistants and production conversational search systems.

Conversational Search: going from Chat towards true Dialogue 💬

The Conversational Search and Recommenders workshop led by Guglielmo Faggioli, Nicola Ferro, and Simone Merlo (University of Padua) was a highlight of the conference for me, diving deep into what it actually means to build conversational systems that feel natural and helpful, not just reactive.

The key insight that emerged? Successful conversational search requires mixed initiative. The system should be an active participant in the conversation, not just a passive responder.

This means proactively asking clarifying questions, surfacing related information users might not have thought to ask for, and gracefully handling the complex linguistic phenomena that characterize human dialogue.

The workshop presented approaches for handling many NLU challenges:

- Anaphoras: References that depend on previous context ("Tell me about Tesla" → "Who founded it?")

- Ellipsis: Omitted words understood from context ("Weather today?" → "And tomorrow?")

- Topic shifts: When conversations naturally move to new subjects

- Topic returns: When users circle back to earlier discussion points (have you notice how ChatGPT recently improved in this aspect over long-context conversations?)

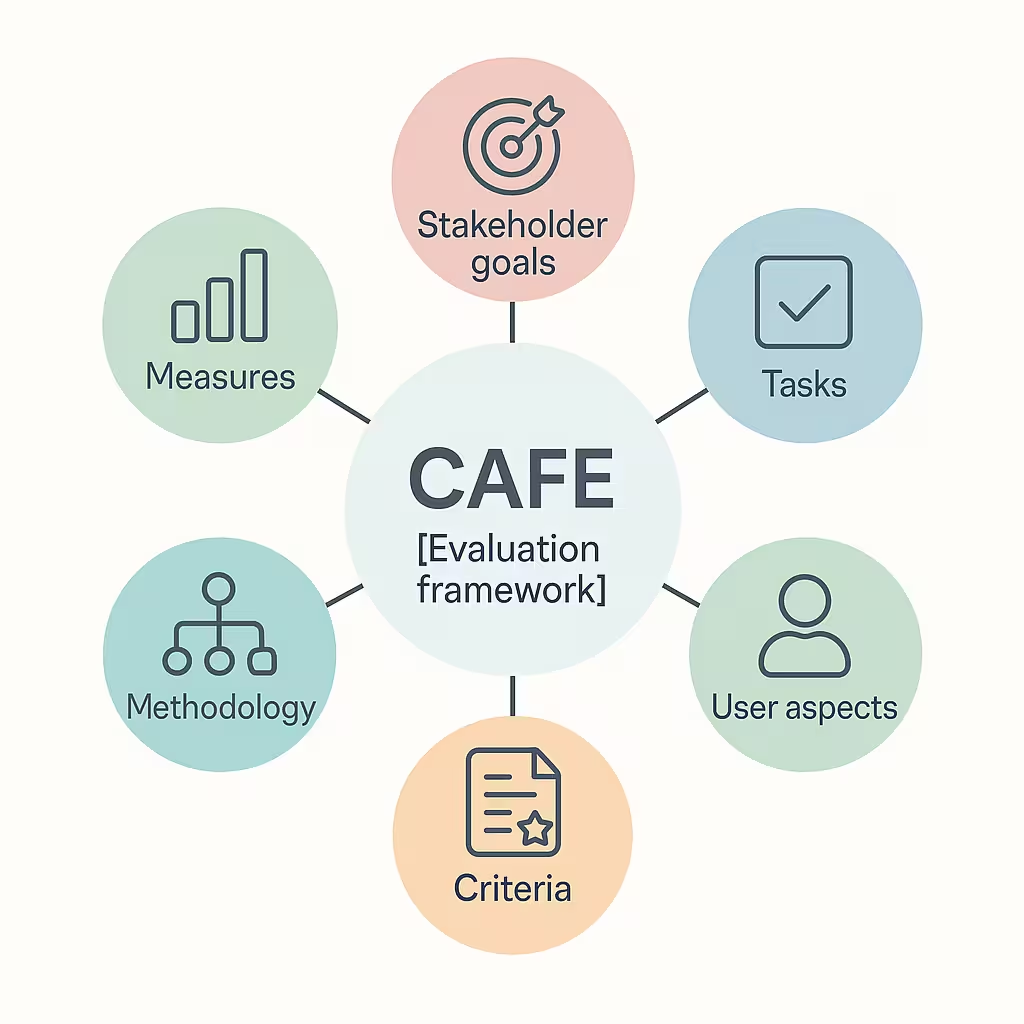

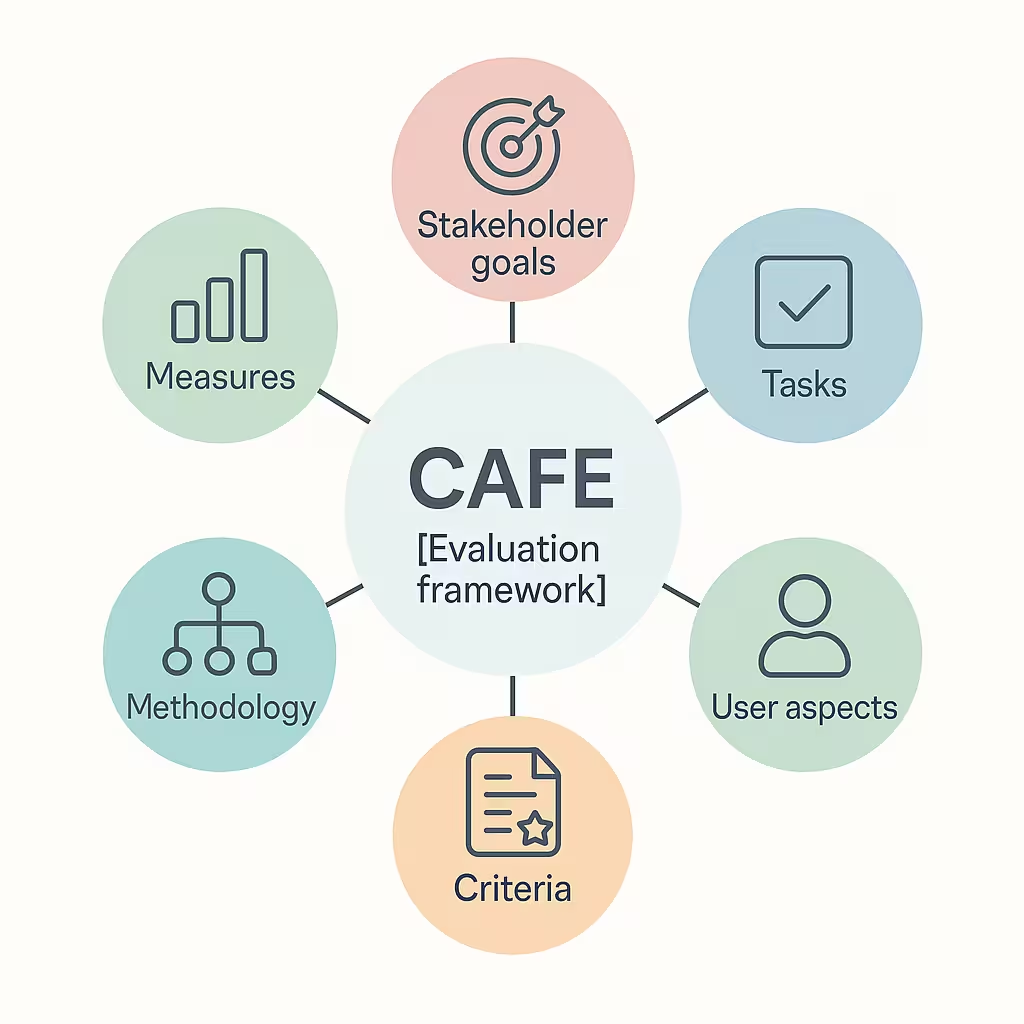

It was nice to see a big emphasis on how we evaluate the quality of conversational experiences. The newly developed CAFE (Conversational Agents Framework for Evaluation) by Christine Bauer (University of Salzburg), Li Chen, Nicola Ferro (University of Padua), and Norbert Fuhr provides a comprehensive approach for assessing conversational systems across multiple dimensions: relevance of course, but also assessing conversation flow, measuring user effort, trust building, and ultimately task completion effectiveness as a final score of the system’s usefulness.

Components of a CAFE evaluation

The CAFE framework's six-component approach (stakeholder goals, user tasks, user aspects, evaluation criteria, methodology, and measures) represents a maturation of how we think about conversational system evaluation. It acknowledges that different stakeholders (users, businesses, and researchers) have different success criteria, and that evaluation must account for this complexity.

LLM Factuality: when Models need to choose between Memory and Evidence 🧠

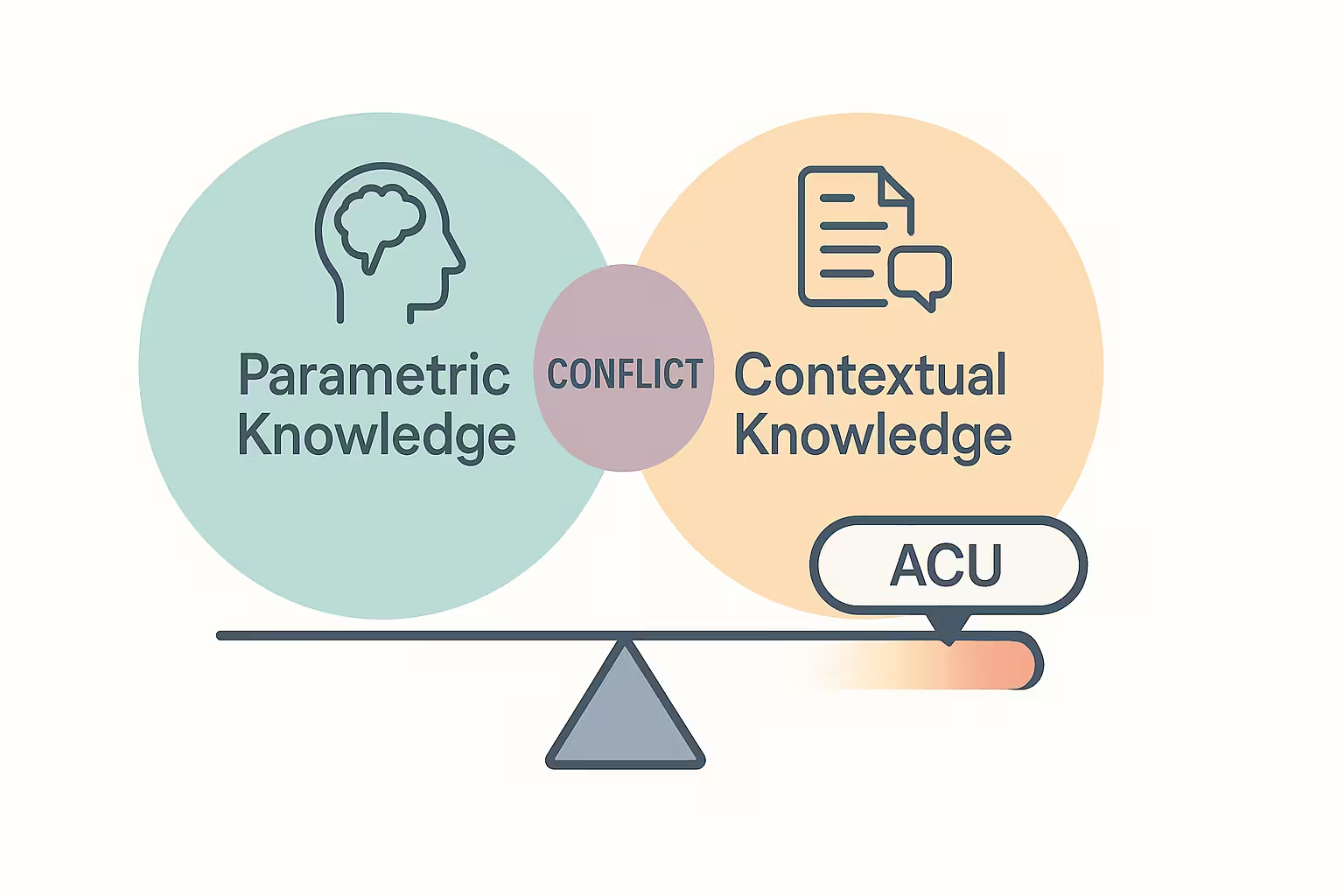

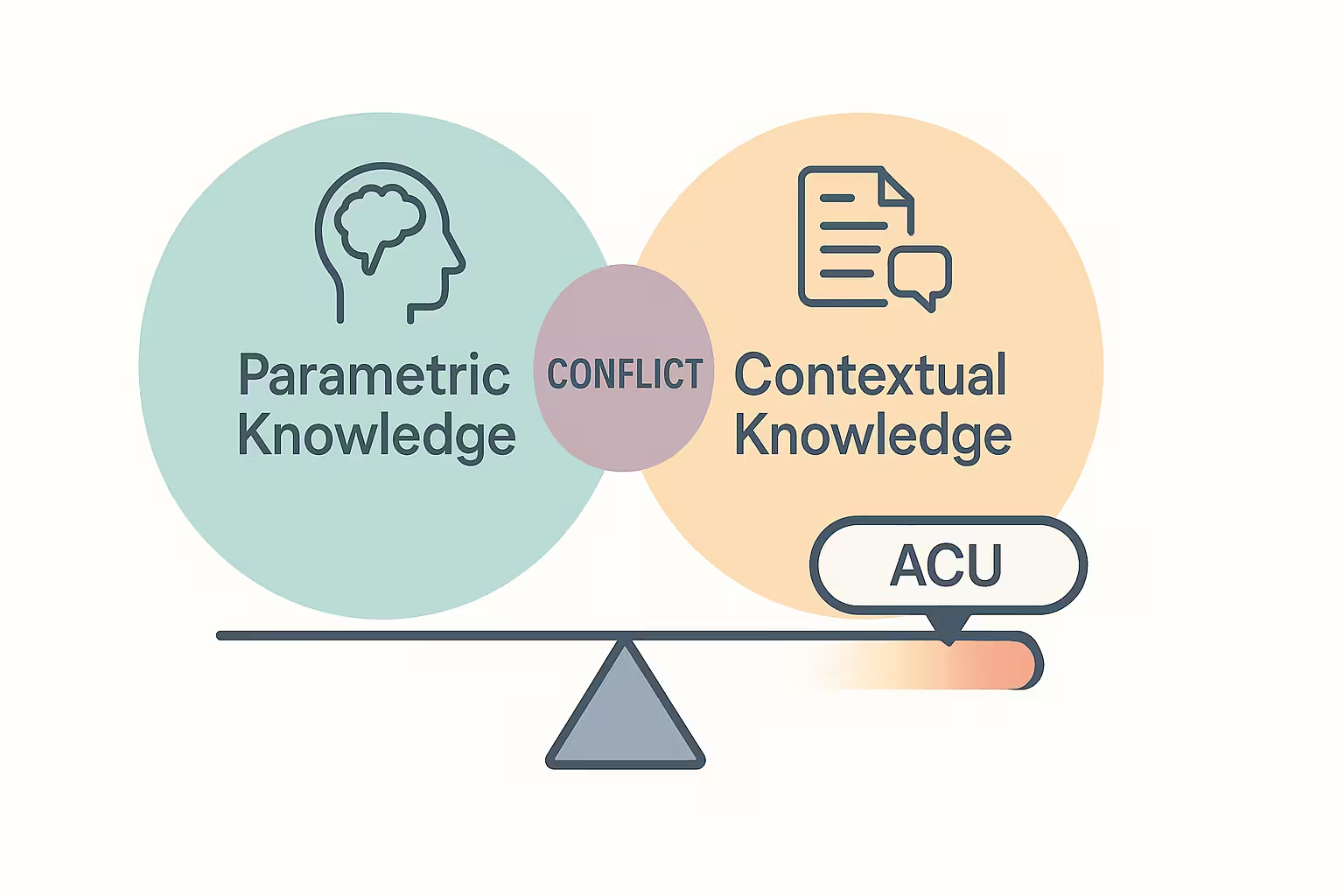

A very intellectually stimulating presentation was Isabelle Augenstein's keynote on LLM factuality, examining the tension between parametric knowledge (what models learned during training) and contextual knowledge (what we provide through retrieval). Dr. Augenstein, Professor at the University of Copenhagen and winner of the 2025 Karen Spärck Jones Award, is Denmark's youngest-ever female full professor and heads the Copenhagen Natural Language Understanding research group.

Her research reveals a surprising paradox: LLMs are surprisingly stubborn about certain types of facts, even when presented with contradictory evidence. The key insight seems to be fact dynamicity: models behave differently with static facts (unchanging truths), temporal facts (time-dependent information), and dynamic facts (frequently changing data).

What I found particularly compelling was Augenstein's work on attribution methods, identifying techniques for understanding which training instances or neural pathways influence specific model predictions.

The unified framework for attribution methods she presented offers both Instance Attribution (tracing predictions back to training examples) and Neuron Attribution (identifying important neural pathways).

This is not just cool research: we can expect real impact on RAG systems performance. When we build retrieval pipelines for an AI to answer user questions, we do need to understand whether retrieved context is relevant, but also whether the model will actually use it appropriately. Her research on the DRUID dataset shows that context-memory conflicts are less prevalent in real-world scenarios than synthetic benchmarks suggest, a reassuring result for all of us building production RAG systems!

The work introduces a new metric, ACU (Attention Context Usage), that measures how much models actually use provided context in their predictions. This gives us a quantitative way to understand when and why models prioritize their parametric knowledge over retrieved information.

Using ACU scores, we can quantify the relative impact of both sources of truth

Evaluation gets scientific: moving beyond Precision@K 📊

One encouraging trends at ECIR25 was that evaluation methods are becoming quite sophisticated. The field is moving beyond simple relevance metrics (Precision/Recall/F1 scores are still important, but never the whole picture), moving towards broader frameworks that capture what users actually care about. This is how we build not only state-of-the-art systems, but also products people want!

The Green IR initiative is also timely: by adding resource efficiency metrics (energy consumption, computational cost) alongside traditional effectiveness measures, we can assess if the tech we build has a net positive impact on the world around us and minimize the environmental cost of our technologies.

As the search systems we build scale to handle billions of queries, we can’t overlook understanding the environmental and economic impact of our work.

For RAG systems specifically, the community is developing standardized approaches for measuring more than just answer quality: assessing factual accuracy, source attribution, and response uncertainty. The RURAGE (Robust Universal RAG Evaluator) framework by Nikita Krayko, Ivan Sidorov, and colleagues, provides practitioners with concrete tools for continuous QA performance testing through ensemble features that combine lexical analysis, model-based assessments, and uncertainty metrics.

The shift from synthetic benchmarks to real-world evaluation datasets represents a maturation that's long overdue, as there’s always a gap between performance in the lab and performance in the field - something we've learned times and times over from optimizing search relevance in production environments.

The Momentum: we’re building with the same building blocks 🧱

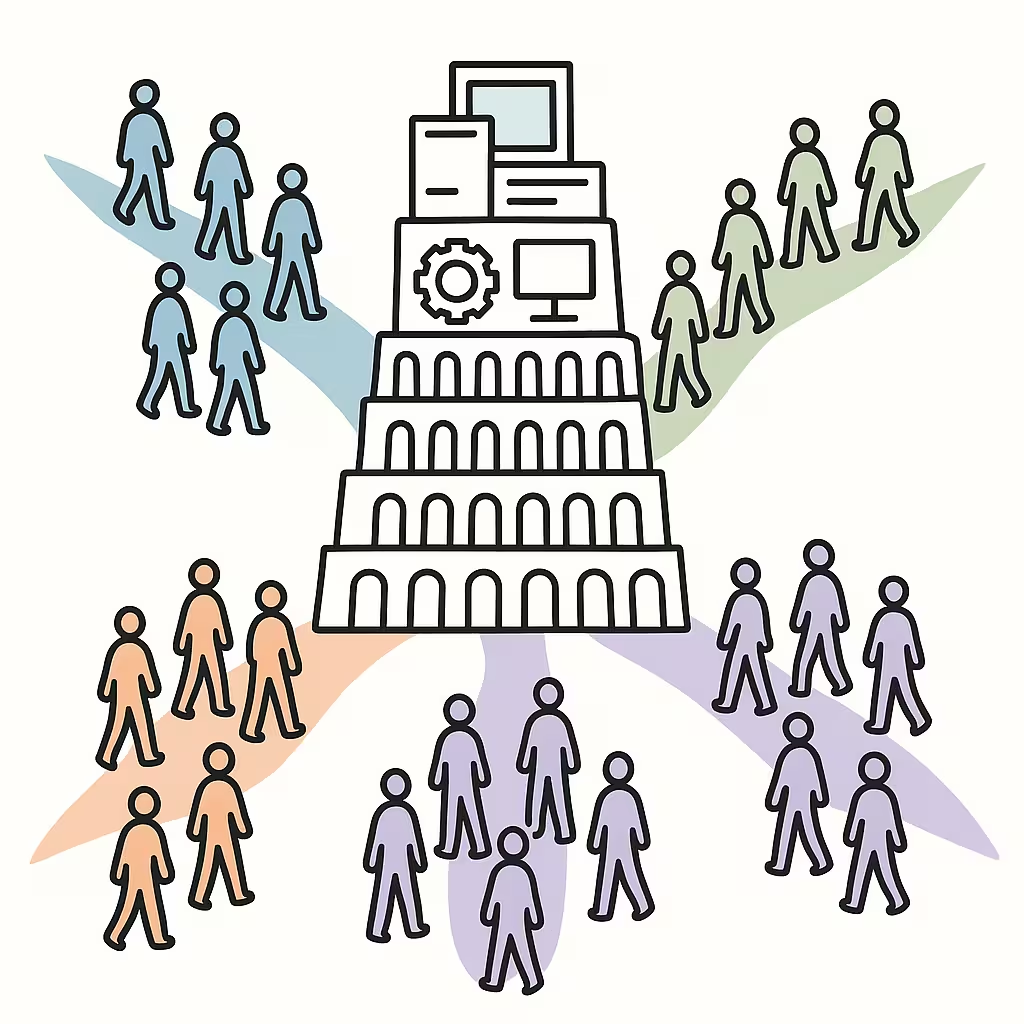

From our conversations with attendees, a consistent impression emerged: we can see how aligned the broader ecosystem has become around certain core capabilities. Whether in academic presentations, in poster discussions, or in industry demos, teams are converging on similar architectural patterns:

- Hybrid retrieval combining multiple signal types for ranking

- Context-aware query reformulation enabling multi-turn scenarios & use-cases

- Evaluation frameworks that go beyond traditional relevance metrics

- Attention to trustworthiness, bias detection, and factual accuracy

This convergence suggests we're moving from a period of experimentation on RAG and semantic search to one of standardization and scale. The fundamental building blocks are now well understood; the differentiation now lies in execution, integration, and the ability to deploy these capabilities at scale while maintaining quality and performance.

From “everyone does unique experiments” to “building the new AI stack together”

From Art to Science: The Industrialization of Conversational AI ⚙️

It was very exciting to notice at ECIR25 how conversational AI is going from an art to a science. Techniques that were previously the domain of research labs are being systematized, standardized, and scaled.

Dialogue management is moving from ad-hoc rules to principled frameworks with clear component architectures. The workshop presentations demonstrated modular approaches where intent detection, state tracking, query reformulation, and response generation can be independently optimized and composed.

Prompting strategies are evolving from intuitive trial-and-error to methodical optimization. Research on zero-shot clarification, few-shot learning for query reformulation, and chain-of-thought prompting provides concrete guidance for practitioners.

Personalization vs. exploration tradeoffs, a classic challenge in recommender systems, are now being rigorously studied in conversational contexts. How do you balance giving users what they expect with introducing them to new possibilities? The frameworks presented offer quantitative approaches to this eternal question (remember the Spotify Personalization talk by Mounia Lalmas at ECIR23?).

Looking Forward: The Work That Lies Ahead 🔮

The advances we saw at ECIR25 validate many of the technical directions our industry has been pursuing, from hybrid search to conversation management. They also highlighted challenges ahead:

- Trustworthiness and provenance tracking need systematic solutions. Users need to understand not just what information they're getting, but where it came from and how confident they should be in it.

- Real-time performance at quality remains a fundamental challenge. The most sophisticated techniques often require significant computational resources, making them work within acceptable latency budgets, while maintaining accuracy is an ongoing optimization problem.

- Multimodal integration is still in its early stages. While the potential is clear, building systems that seamlessly work across text, images, audio, and structured data requires solving numerous technical and UX challenges.

Why Academic Engagement Matters 🎓

Conferences like ECIR provide precious perspectives on where our field is heading.

They offer early signals about techniques that will become mainstream, challenges that need systematic solutions, and introduce evaluation methodologies that may well become industry standards.

For our team at Algolia, ECIR25 provided validation of several technical directions we've been pursuing, while opening our eyes to new possibilities we hadn't fully considered. The hybrid search approaches being developed align with our architecture decisions, while the advanced evaluation frameworks give us ideas for better measuring the impact of our improvements.

Perhaps most importantly, academic conferences connect us with the broader community of researchers and practitioners working on similar challenges. The informal conversations, workshop discussions, and chance encounters often prove as valuable as the formal presentations.

Wrapping Up: The Conversation Continues ☕️

ECIR25 left us optimistic about the direction of our field. The technology is maturing, the evaluation methods are becoming more sophisticated, and the applications are expanding beyond what we imagined just a few years ago - a trend we've been tracking closely in our own development.

The ecosystem moving from keyword-based search to conversational interfaces is a significant milestone: we are essentially refunding how humans interact with information. We're moving toward systems that don't just do retrieval and ranking, but engage in back-and-forth dialogue to understand and fulfill complex information needs of our users.

The work showcased in Lucca represents important steps toward that future. The techniques are becoming more principled, the evaluations more comprehensive, and the applications more ambitious. It's an exciting time to be working on these problems.

We'll be incorporating many of these insights into our ongoing research and development efforts. The conversation about conversational search is just getting started, and we're excited to be part of shaping where it goes next.

Thanks to the ECIR organizing committee, particularly the team at IMT Lucca led by Rector Lorenzo Casini, alongside organizers from CNR (National Research Council), University of Pisa, and University La Sapienza Rome, for hosting such a well-run conference. The three keynote speakers - Dr. Alon Halevy (Google Cloud Distinguished Engineer), Prof. Hannah Bast (University of Freiburg), and Prof. Isabelle Augenstein (University of Copenhagen) - provided remarkable insights spanning from GenAI capabilities in data management to efficient RDF databases and LLM factuality. Thanks to our Algolia colleagues Hugo Rybinski, Claire Helme-Guizon, Mihaela Nistor, Ana-Maria-Gabriela Lungu, and Ruxandra Voicu for the stimulating discussions, shared insights, and their contributions to this blog post.

I’m also grateful to work in a company where the leadership recognizes the value of engaging with the academic research community. These investments in understanding state-of-the-art techniques consistently pay dividends in our product development.

The future of search is conversational, and the future of conversation is already here. 🚀

Ready to chat about the future of chat-based search? Connect with me on LinkedIn to join the conversation about search technology and AI developments.

And if you find these challenges as exciting as we do and want to help shape the future of search and conversational AI at Algolia, DM me to discuss current openings on our team!

We're always looking for talented engineers who share our passion for making search more intelligent and intuitive.

AI Search

The results users need to seeAI Browse

Category and collection pages built by AIAI Recommendations

Suggestions anywhere in the user journeyAdvanced Personalization

Tailored experiences drive profitabilityMerchandising Studio

Data-enhanced customer experiences, without codeAnalytics

All your insights in one dashboardUI Components

Pre-built components for custom journeys